A recently published report in the Proceedings of the National Academy of Sciences, titled “Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis”, illustrates how data that are left unchecked and not properly analyzed can incorporate and perpetuate the economic and social biases that already contribute to healthcare disparities. The risk is greatest in situations with complex trade-offs and highly uncertain outcomes.

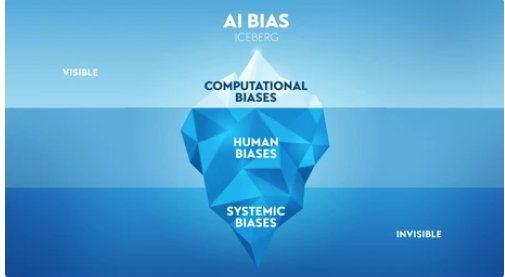

For instance, if patients with low income tend to do worse when receiving treatment, a deep learning (DL) system could recommend against treating them, because it learns from the characteristics of the data used to train it. If the social biases behind healthcare disparities are not rigorously analyzed when the algorithm is implemented, they can produce decisions and systems that perpetuate them, as in the example above. These biases can also be amplified when such decisions are extrapolated to other institutions or settings where the system is deployed. If the training data are not rigorously analyzed, and the system's decisions are accepted over our moral and knowledge-guided intuition, DL systems risk automating biases that are otherwise well known and making them invisible.

As healthcare technologies rapidly advance, it’s critical we consider the ethical implications in order to steer innovation responsibly. Ethical concerns include:

Privacy and security of patient data:

- Wellness and health app data privacy – Many wellness apps and wearables collect detailed personal health data but have been criticized for opaque data practices and sharing private data with third parties.

- Genetic testing privacy – Direct-to-consumer genetic testing companies retain customers' DNA data, raising questions about how that data can be shared, sold, or used without consent.

- Lack of transparency around AI training data – Large AI systems are often trained on huge datasets of patient medical records, which raises questions about consent and transparency.

- Identifiability of anonymized datasets – Research shows that “anonymized” healthcare data can often be re-identified, for example by linking it with other datasets, which undermines its presumed privacy.

- Social stigma from data leaks – If medical conditions are leaked or exposed, patients can suffer psychological distress, social stigma, and financial costs.

- Exploitation of health data – Private companies, insurers, and employers may use health data in ways that discriminate against or penalize vulnerable patients.

- Inadequate informed consent – Patients may not fully understand, or consent to, how their health data is used and shared for secondary purposes.
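The re-identification risk described under “Identifiability of anonymized datasets” can be made concrete with k-anonymity, a standard privacy measure: a table is k-anonymous if every combination of quasi-identifiers (attributes such as ZIP code, birth year, and sex that are not names but can still be linked to outside data) is shared by at least k records. The minimal sketch below assumes a tiny, invented table and hypothetical field names; it illustrates the idea and is not a de-identification tool.

```python
from collections import Counter

# Invented example records: names removed, but quasi-identifiers kept.
records = [
    {"zip": "02139", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1980, "sex": "F", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": 1975, "sex": "M", "diagnosis": "HIV"},
    {"zip": "02141", "birth_year": 1990, "sex": "F", "diagnosis": "depression"},
    {"zip": "02141", "birth_year": 1990, "sex": "F", "diagnosis": "migraine"},
]

# Hypothetical choice of quasi-identifiers for this illustration.
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def equivalence_classes(rows, keys):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def k_anonymity(rows, keys):
    """k is the size of the smallest group; k == 1 means at least one record is unique."""
    return min(equivalence_classes(rows, keys).values())


def unique_records(rows, keys):
    """Records whose quasi-identifier combination appears only once (the easiest to link)."""
    counts = equivalence_classes(rows, keys)
    return [row for row in rows if counts[tuple(row[k] for k in keys)] == 1]


if __name__ == "__main__":
    print("k =", k_anonymity(records, QUASI_IDENTIFIERS))
    for row in unique_records(records, QUASI_IDENTIFIERS):
        print("re-identifiable:", row)
```

Here k is 1: the only man born in 1975 in ZIP 02139 is unique, so anyone who knows those three facts about a person in the dataset learns his diagnosis. Generalizing values (for example, reporting birth decade instead of birth year) or suppressing rare records raises k, at some cost to the data's usefulness.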

Ethical considerations regarding transparency and bias in AI systems in healthcare:

- Algorithmic accountability – AI systems should be explainable and auditable. Understanding how an algorithm was trained and arrived at a decision is key to ensuring appropriate use.

- Mitigating bias – AI bias often stems from imperfect training data that reflect societal biases. Ongoing testing for demographic disparities and unfair outcomes is critical.

- Diverse data – Training datasets should represent diverse populations. Unrepresentative data can produce systems that perform worse for under-represented groups.

- Avoiding opacity – Complex AI models such as deep neural networks can act as black boxes. Maximizing transparency allows their behavior to be scrutinized.

- Human oversight – Doctors should monitor AI for accuracy and fairness. Humans should have the final say in serious patient matters.

- Re-evaluating older AI – As new biases emerge, older AI tools should be re-evaluated before continued deployment in care.

- Patient communication – Patients should understand when and how AI is used in their care, so that their consent is informed.

- Responsible marketing – Healthcare companies should not overhype or misrepresent AI in ways that mislead patients.

- Impact on workforce – AI should augment clinicians and enhance their work, not replace human roles that require empathy and nuance.
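The “ongoing testing for demographic disparities” recommended above can start with something as simple as computing a classifier's sensitivity (the share of actual positive cases it correctly flags) separately for each group and checking the gap, which is the kind of per-group comparison that exposes problems like the gender imbalance described at the start of this post. The minimal sketch below uses invented labels and predictions and a hypothetical tolerance of 0.1; a real audit would use held-out clinical data, confidence intervals, and several metrics rather than one.

```python
from collections import defaultdict

# Invented example data: (group, true_label, predicted_label); 1 = condition present.
results = [
    ("female", 1, 1), ("female", 1, 0), ("female", 1, 0), ("female", 0, 0),
    ("male", 1, 1), ("male", 1, 1), ("male", 1, 0), ("male", 0, 1),
]

MAX_GAP = 0.1  # hypothetical tolerance for the sensitivity gap between groups


def sensitivity_by_group(rows):
    """True positive rate per group: correctly flagged positives / all actual positives."""
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, label, pred in rows:
        if label == 1:
            positives[group] += 1
            if pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


def audit(rows, max_gap=MAX_GAP):
    """Return per-group sensitivity, the largest gap, and whether it exceeds the tolerance."""
    rates = sensitivity_by_group(rows)
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap > max_gap


if __name__ == "__main__":
    rates, gap, flagged = audit(results)
    # female ≈ 0.33, male ≈ 0.67, gap ≈ 0.33, so the audit flags a disparity
    print(rates, round(gap, 2), flagged)
```

Sensitivity is only one choice; depending on the clinical stakes, an audit might also compare false positive rates or calibration across groups.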

The following case study highlights how emerging technologies require rigorous efficacy testing and ethical evaluation even before they reach the market, in order to strike the right balance between innovation and responsible healthcare.

Liquid Biopsy for Early Cancer Screening

Liquid biopsy is a promising new blood test that screens for early signs of cancer by detecting tumor DNA in the bloodstream. While not yet approved for broad screening use, some private companies already offer direct-to-consumer liquid biopsy tests.

Key Ethical Concerns:

- Unproven reliability – False positives could prompt unnecessary stress and procedures. False negatives could provide false reassurance.

- Creating patient anxiety – People who receive positive results without clinical guidance may be distressed and unsure of their next steps.

- Overtesting and overdiagnosis – Broad screening of asymptomatic people risks detecting small tumors that may never progress, which can prompt overtreatment.

- Limited oversight – Direct-to-consumer tests bypass doctors, limiting informed decision-making about whether the test is appropriate for a given patient.

- Widening disparities – Out-of-pocket costs could restrict access along socioeconomic lines until the tests are covered by insurance plans.
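Why false positives are such a concern when screening asymptomatic people follows from Bayes' theorem: when a condition is rare, even a fairly accurate test produces many false alarms. The numbers below are hypothetical and chosen only for illustration; they are not the performance of any real liquid biopsy test. Assume a prevalence $p = 1\%$, sensitivity $\mathrm{Se} = 90\%$, and specificity $\mathrm{Sp} = 99\%$. The positive predictive value (the probability that a positive result is a true positive) is then

$$
\mathrm{PPV} = \frac{\mathrm{Se}\,p}{\mathrm{Se}\,p + (1-\mathrm{Sp})(1-p)}
= \frac{0.90 \times 0.01}{0.90 \times 0.01 + 0.01 \times 0.99}
= \frac{0.009}{0.0189} \approx 0.48
$$

Under these assumptions, roughly half of all positive results would be false positives despite a 99% specificity, and the share of false positives grows further as prevalence falls.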

Overall, AI developers and users have an ethical responsibility to ensure these technologies are designed, tested, and deployed carefully in line with patient privacy rights and well-being. Ongoing scrutiny is required.

The core ethical obligation is to protect patient privacy, build trust, and prevent exploitation or unintended harm from emerging data practices in healthcare. Robust governance, transparency, and consent protocols are needed to uphold these obligations.

Many open questions remain about how to build AI that is fair, accountable, and aligned with patient interests. Figuring out responsible governance frameworks and best practices will require collaboration among all stakeholders: technologists, healthcare organizations, clinicians, ethicists, and patients themselves. It's an evolving conversation, but I believe keeping ethical considerations like the ones outlined here front and center will lead to more trustworthy and beneficent innovations in healthcare AI.

Here are some key steps that various stakeholders could take to strengthen the ethics of emerging health technologies:

- Policymakers – Develop regulations and standards that embed ethics principles into the design, testing and use of new technologies.

- Industry – Prioritize patient benefit over profits. Build internal ethics advisory teams. Conduct impact assessments before and after launch. Be fully transparent.

- Healthcare organizations – Only implement vetted technologies adhering to ethical guidelines. Structure responsible data sharing protocols.

- Clinicians – Advocate for patients. Voice concerns about technologies that clash with the tenets of ethical care. Uphold informed consent.

- Patients – Seek education on emerging technologies. Provide feedback on needs, values and concerns as end-users. Participate in policy processes.

- Ethicists – Proactively identify issues posed by innovations. Collaborate on practical frameworks balancing ethics and progress.

- Researchers – Rigorously evaluate safety, efficacy, and unintended consequences before and after market release. Publish transparently.

- Investors – Conduct ethics due diligence and demand accountability from funded startups. Support responsible innovation.

- Media – Provide balanced, evidence-based analyses of benefits and risks. Avoid hype or alarmism.

With collaborative action and shared accountability, we can cultivate health technology ecosystems where ethical considerations are baked into the process, not an afterthought. This will be critical for earning public trust.
